Generative AI can be used in cybersecurity to automate threat detection, simulate advanced attack scenarios, enhance anomaly detection systems, generate synthetic data for training, and improve incident response workflows.

Organizations can proactively strengthen their security posture by leveraging machine learning models like GANs and large language models.

As cyber threats grow in complexity and volume, traditional security tools struggle to keep pace. Integrating generative AI into cybersecurity offers a paradigm shift, enabling proactive, adaptive, and highly automated defense mechanisms.

A cybersecurity risk calculator can also support informed decision-making by helping assess risk levels based on threat likelihood, vulnerabilities, and asset value.
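As a rough illustration of how such a calculator might combine its three inputs, the sketch below assumes a simple multiplicative model with each factor rated from 1 to 5. The scale, the band cut-offs, and the names `RiskInput`, `risk_score`, and `risk_level` are hypothetical, not the formula of any particular tool.

```python
from dataclasses import dataclass


@dataclass
class RiskInput:
    """Each factor is rated on an assumed 1-5 scale (1 = lowest, 5 = highest)."""
    threat_likelihood: int
    vulnerability: int
    asset_value: int


def risk_score(r: RiskInput) -> int:
    """Multiplicative risk model: scores range from 1 to 125."""
    for name, value in vars(r).items():
        if not 1 <= value <= 5:
            raise ValueError(f"{name} must be between 1 and 5, got {value}")
    return r.threat_likelihood * r.vulnerability * r.asset_value


def risk_level(score: int) -> str:
    """Map a score to a band; these cut-offs are illustrative assumptions."""
    if score >= 64:
        return "critical"
    if score >= 27:
        return "high"
    if score >= 8:
        return "medium"
    return "low"


if __name__ == "__main__":
    web_server = RiskInput(threat_likelihood=4, vulnerability=3, asset_value=5)
    score = risk_score(web_server)
    print(score, risk_level(score))  # 60 high
```

The cut-offs 64, 27, and 8 are 4³, 3³, and 2³, so a band is reached exactly when the geometric mean of the three ratings is at least 4, 3, or 2.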

From generating synthetic datasets for model training to simulating zero-day exploits for red teaming, generative AI plays a critical role across multiple layers of the security stack.

This article examines how generative AI can be used in cybersecurity by focusing on its applications in threat modeling, behavioral analytics, intrusion detection, and automated response systems.

Understanding these capabilities is essential for security professionals to build resilient and intelligent cyber defense frameworks.

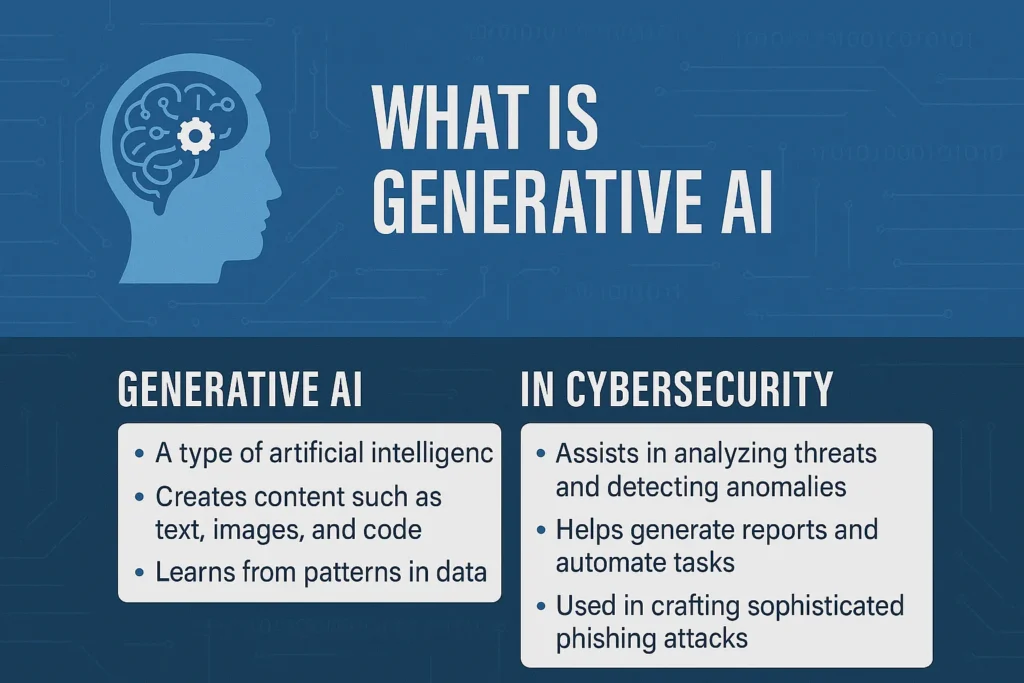

What Is Generative AI and Why Does It Matter in Cybersecurity?

Generative AI is a subset of artificial intelligence that uses deep learning models, such as Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), and Large Language Models (LLMs), to generate new data, simulate patterns, and predict outcomes based on existing datasets.

Unlike traditional discriminative AI, which primarily focuses on classification or detection, generative AI can create highly realistic content that mimics real-world behavior.

This ability is particularly valuable in cybersecurity, where detecting sophisticated threats requires dynamic, adaptive defenses.

Understanding how generative AI can be used in cybersecurity is critical for security professionals aiming to stay ahead of evolving attack vectors.

These models can simulate zero-day exploits, craft realistic phishing emails for red team exercises, and generate synthetic logs or traffic for training anomaly detection systems without compromising sensitive data.
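A minimal sketch of the synthetic-log idea follows. It assumes a simple parametric sampler rather than a trained GAN or VAE: it draws fake authentication events from made-up user and IP pools and injects a labeled brute-force burst, so an anomaly detector has ground truth to learn from. All field names, pools, and rates are hypothetical.

```python
import random
from datetime import datetime, timedelta

USERS = [f"user{i:02d}" for i in range(20)]           # fake user pool
INTERNAL_IPS = [f"10.0.0.{i}" for i in range(1, 51)]  # private address space
ATTACKER_IP = "203.0.113.7"                           # TEST-NET-3 documentation range


def synthetic_auth_log(n_normal: int, n_attack: int, seed: int = 42) -> list[dict]:
    """Generate labeled synthetic login events; no real user data is involved."""
    rng = random.Random(seed)  # seeded so datasets are reproducible
    start = datetime(2024, 1, 1)
    events = []
    for _ in range(n_normal):
        events.append({
            "ts": start + timedelta(seconds=rng.randint(0, 86_400)),
            "user": rng.choice(USERS),
            "src_ip": rng.choice(INTERNAL_IPS),
            "success": rng.random() > 0.05,  # assume roughly 5% benign failures
            "label": "normal",
        })
    # Inject a brute-force burst: many failures from one external IP within a minute.
    burst_start = start + timedelta(hours=rng.randint(0, 23))
    target = rng.choice(USERS)
    for i in range(n_attack):
        events.append({
            "ts": burst_start + timedelta(seconds=i * 2),
            "user": target,
            "src_ip": ATTACKER_IP,
            "success": False,
            "label": "brute_force",
        })
    return sorted(events, key=lambda e: e["ts"])


if __name__ == "__main__":
    log = synthetic_auth_log(n_normal=500, n_attack=30)
    print(len(log), sum(e["label"] == "brute_force" for e in log))  # 530 30
```

A real generative model would learn these distributions from data instead of hard-coding them; the value of the labels is the same either way.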

Additionally, generative AI enhances incident response by using natural language models to automatically summarize logs, prioritize alerts, and recommend mitigation steps.

As cyber threats become more complex and automated, generative AI offers a proactive, scalable, and intelligent approach to defending digital environments in real time.


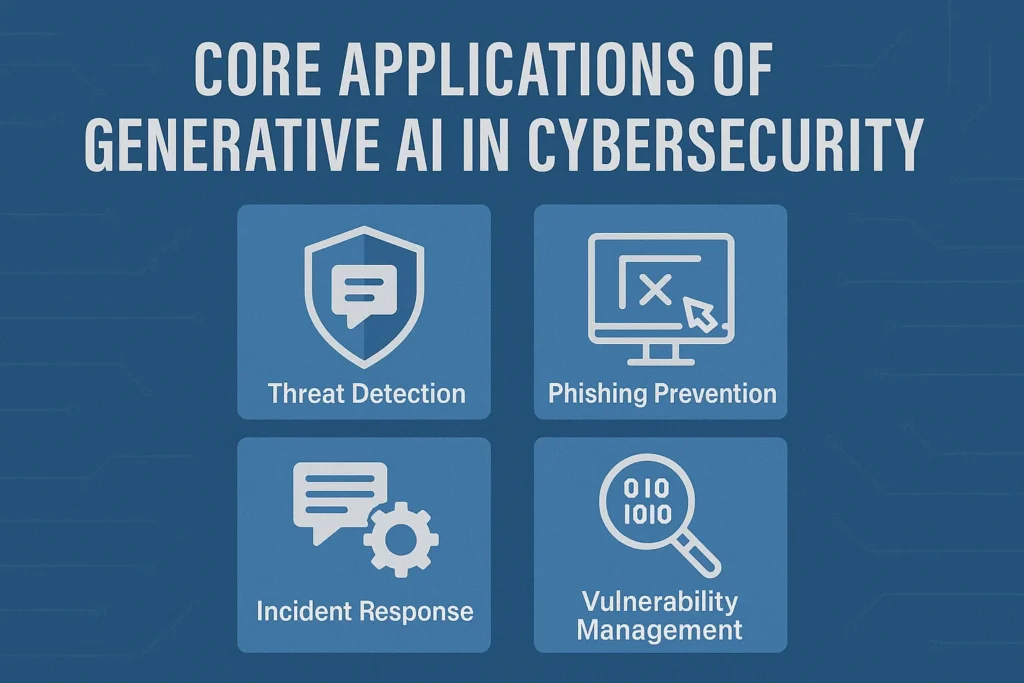

Core Applications of Generative AI in Cybersecurity

Generative AI has introduced groundbreaking capabilities in various domains, and cybersecurity is no exception. It’s transforming how security professionals detect threats, respond to incidents, and manage cyber risks.

For a deeper dive into how modern technologies like ZTNA and VPN complement these AI-driven defenses, check out The Role of ZTNA and VPN in Modern Cybersecurity Strategies.

Here’s a detailed breakdown of the core applications of Generative AI in cybersecurity, along with real-world examples to illustrate each one:

1. Threat Detection & Intelligence Generation

How It Works:

Generative AI models, especially large language models (LLMs), can analyze vast volumes of structured and unstructured data (e.g., logs, threat reports, dark web chatter) to detect patterns and synthesize threat intelligence.

Examples:

- Threat Report Summarization: LLMs like GPT can read long threat reports and generate concise executive summaries or bullet points for SOC teams.

- Emerging Threat Pattern Detection: AI models generate hypotheses about potential attack vectors by analyzing chatter on underground forums and correlating it with known IOCs (Indicators of Compromise).

Real-World Use Case:

- Recorded Future and ThreatConnect use generative components to enhance their threat intelligence platforms by automatically generating readable threat insights from raw data.
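Because LLM summaries can hallucinate indicators, one practical safeguard is to check that every IOC in a generated summary actually appears in the source report. The sketch below uses deliberately simple regular expressions for IPv4 addresses, SHA-256 hashes, and a few domain suffixes; the patterns and function names are illustrative assumptions, and production IOC extraction needs far more robust parsing (defanged indicators, IPv6, URLs).

```python
import re

# Simplified IOC patterns (assumptions for illustration only).
IOC_PATTERNS = {
    "ipv4": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
    "sha256": re.compile(r"\b[a-fA-F0-9]{64}\b"),
    "domain": re.compile(r"\b(?:[a-z0-9-]+\.)+(?:com|net|org|io|ru)\b", re.IGNORECASE),
}


def extract_iocs(text: str) -> set[str]:
    """Return the set of indicator strings found in the text, lower-cased."""
    found = set()
    for pattern in IOC_PATTERNS.values():
        found.update(m.lower() for m in pattern.findall(text))
    return found


def ungrounded_iocs(source_report: str, llm_summary: str) -> set[str]:
    """IOCs the summary mentions that never appear in the source report."""
    return extract_iocs(llm_summary) - extract_iocs(source_report)


if __name__ == "__main__":
    report = "The loader beacons to update-check.net and 198.51.100.23."
    summary = "The malware contacts update-check.net and 198.51.100.99."
    print(ungrounded_iocs(report, summary))  # {'198.51.100.99'}
```

Any non-empty result is a signal to send the summary back for analyst review before it reaches a SOC report.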

2. Automated Incident Response Playbooks

How It Works:

Generative AI helps create, refine, and automate incident response (IR) playbooks. It can simulate decision-making paths or suggest next steps in response to detected threats.

Examples:

- AI-Generated Runbooks: When an alert is triggered (e.g., ransomware behavior), the AI proposes a customized response workflow based on severity, asset type, and current posture.

- SOAR Integration: Generative AI integrated with SOAR (Security Orchestration, Automation, and Response) tools like Cortex XSOAR can dynamically update or create response scripts.
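An LLM-proposed workflow is still untrusted output, so a common pattern is to parse it into a structured format and check it against an allowlist before any SOAR tool executes it. The sketch below assumes the model was prompted to return JSON with a `steps` list; the action names and JSON shape are hypothetical and are not Cortex XSOAR's actual playbook format.

```python
import json

# Hypothetical actions the SOAR integration is permitted to run.
ALLOWED_ACTIONS = {"isolate_host", "disable_account", "block_ip", "collect_triage", "notify_analyst"}
# Allowed actions that still require human sign-off before execution.
REQUIRES_APPROVAL = {"isolate_host", "disable_account"}


def validate_playbook(llm_output: str) -> list[dict]:
    """Parse an LLM-proposed playbook and reject unknown or malformed steps."""
    data = json.loads(llm_output)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("playbook must be a JSON object")
    steps = data.get("steps")
    if not isinstance(steps, list) or not steps:
        raise ValueError("playbook must contain a non-empty 'steps' list")
    validated = []
    for i, step in enumerate(steps):
        action = step.get("action") if isinstance(step, dict) else None
        if action not in ALLOWED_ACTIONS:
            raise ValueError(f"step {i}: action {action!r} is not on the allowlist")
        validated.append({
            "action": action,
            "target": str(step.get("target", "")),
            "needs_approval": action in REQUIRES_APPROVAL,
        })
    return validated


if __name__ == "__main__":
    proposal = (
        '{"steps": [{"action": "collect_triage", "target": "WS-042"},'
        ' {"action": "isolate_host", "target": "WS-042"}]}'
    )
    for step in validate_playbook(proposal):
        print(step)
```

Rejecting the whole proposal on any unknown step, rather than silently dropping it, keeps a partially hallucinated plan from running half-finished.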

3. Phishing Detection and Simulation

How It Works:

Generative AI models can mimic human-like writing, which attackers use to generate sophisticated phishing emails. Defenders can also use the same technology to detect or simulate these threats.

Examples:

- Detection: AI models analyze incoming emails and flag those with generative language patterns or indicators of social engineering.

- Simulation: Security teams use LLMs to create realistic phishing emails for simulated campaigns that test employee awareness.

Real-World Use Case:

- Cofense and KnowBe4 use generative AI to create custom phishing simulations tailored to specific industries and job roles.
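Detection models typically combine many signals. The sketch below shows a few classic social-engineering indicators (urgency language, credential requests, and links whose visible text names a different domain than the real target) as a transparent rule-based scorer. It is not a generative or ML model, and the keyword lists and weights are illustrative assumptions.

```python
import re
from urllib.parse import urlparse

URGENCY = ("urgent", "immediately", "within 24 hours", "account suspended", "final notice")
CREDENTIAL_ASKS = ("verify your password", "confirm your credentials", "login details")
# Captures the href value and the visible text of HTML anchors.
LINK_RE = re.compile(r'<a\s+[^>]*href="([^"]+)"[^>]*>(.*?)</a>', re.IGNORECASE | re.DOTALL)


def _host(s: str) -> str:
    """Extract a bare hostname from a URL or a bare domain string."""
    s = s.strip().lower()
    if "://" not in s:
        s = "//" + s  # lets urlparse treat a bare domain as a network location
    return (urlparse(s).hostname or "").removeprefix("www.")


def phishing_score(html_body: str) -> tuple[int, list[str]]:
    """Return a heuristic score and the reasons that contributed to it."""
    text = html_body.lower()
    score, reasons = 0, []
    if any(phrase in text for phrase in URGENCY):
        score += 2
        reasons.append("urgency language")
    if any(phrase in text for phrase in CREDENTIAL_ASKS):
        score += 3
        reasons.append("credential request")
    for href, label in LINK_RE.findall(html_body):
        shown = label.strip()
        if "." not in shown or " " in shown:
            continue  # visible text is not a domain, nothing to compare
        shown_host, actual_host = _host(shown), _host(href)
        # Subdomains of the displayed domain are fine; anything else is a mismatch.
        if actual_host != shown_host and not actual_host.endswith("." + shown_host):
            score += 4
            reasons.append(f"link text {shown_host!r} points to {actual_host!r}")
    return score, reasons


if __name__ == "__main__":
    lure = ('<p>URGENT: your account suspended. Please verify your password at '
            '<a href="https://examplebank.com.evil.example/login">examplebank.com</a></p>')
    print(phishing_score(lure))
```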

4. Vulnerability Management & Patch Recommendations

How It Works:

Generative AI can assist in analyzing CVEs (Common Vulnerabilities and Exposures), prioritizing them based on exploitability, and even generating code suggestions for remediation.

Examples:

- AI-Augmented Patch Notes: The AI generates step-by-step patch instructions tailored to your infrastructure based on a vulnerability scan.

- Developer Assistance: AI tools like GitHub Copilot suggest secure coding practices to prevent vulnerable code during development.
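Exploitability-based prioritization can be sketched without any AI, and the ranked list is a natural input for an LLM that then drafts remediation steps. This sketch assumes each finding already carries a CVSS base score, an EPSS probability, and a flag for CISA's Known Exploited Vulnerabilities (KEV) catalog. The weighting is an illustrative assumption, and the CVE IDs are placeholders.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    cve_id: str
    cvss: float            # CVSS base score, 0.0-10.0
    epss: float            # EPSS probability of exploitation, 0.0-1.0
    in_kev: bool           # listed in CISA's Known Exploited Vulnerabilities catalog
    internet_facing: bool  # whether the affected asset is exposed to the internet


def priority(f: Finding) -> float:
    """Blend severity and exploitability; the weights are illustrative assumptions."""
    score = (f.cvss / 10) * 0.4 + f.epss * 0.6
    if f.in_kev:
        score += 1.0  # known exploitation in the wild outranks everything else
    if f.internet_facing:
        score *= 1.5
    return round(score, 3)


def rank(findings: list[Finding]) -> list[tuple[str, float]]:
    """Return (CVE ID, priority) pairs, highest priority first."""
    return sorted(((f.cve_id, priority(f)) for f in findings), key=lambda p: p[1], reverse=True)


if __name__ == "__main__":
    findings = [
        Finding("CVE-EXAMPLE-0001", cvss=9.8, epss=0.02, in_kev=False, internet_facing=False),
        Finding("CVE-EXAMPLE-0002", cvss=7.5, epss=0.90, in_kev=True, internet_facing=True),
        Finding("CVE-EXAMPLE-0003", cvss=5.3, epss=0.40, in_kev=False, internet_facing=True),
    ]
    for cve, p in rank(findings):
        print(cve, p)
```

Under these weights the CVSS 9.8 finding ranks last because its exploitation probability is low, which is the point of prioritizing by exploitability rather than severity alone.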

5. Security Log & Alert Analysis

How It Works:

Generative AI can process and summarize large volumes of logs (e.g., from SIEMs like Splunk or Elastic) and highlight anomalies or threats.

This is especially useful when paired with advanced forensic tools that support deep log analysis and incident reconstruction. For a curated list of essential options, check out these Top 10 Cybersecurity Forensic Tools for Ethical Hackers in 2025.

Examples:

- Log Summarization: Instead of going through thousands of logs, analysts get daily AI-generated summaries with abnormal events highlighted.

- Alert Prioritization: AI filters out false positives and enriches genuine alerts with contextual information.

Real-World Use Case:

- Exabeam integrates LLMs to enhance user and entity behavior analytics (UEBA), helping SOCs focus on high-risk anomalies.
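Much of the "abnormal events highlighted" step is plain statistics that can run before any LLM sees the data: count events per host and type, compare each count with its historical baseline, and pass only the outliers to the model for a written summary. A minimal sketch, assuming a per-key history of daily counts and a z-score threshold of 3 (both illustrative choices).

```python
from collections import Counter
from statistics import mean, stdev


def daily_outliers(
    today_events: list[tuple[str, str]],
    history: dict[tuple[str, str], list[int]],
    z_threshold: float = 3.0,
) -> list[str]:
    """Flag (host, event_type) counts far above their historical daily baseline."""
    counts = Counter(today_events)
    lines = []
    for (host, etype), count in counts.most_common():
        past = history.get((host, etype), [])
        if len(past) < 2:
            # stdev needs at least two data points
            lines.append(f"{host}/{etype}: count={count}, no baseline yet")
            continue
        mu, sigma = mean(past), stdev(past)
        if sigma > 0:
            z = (count - mu) / sigma
        else:
            z = float("inf") if count > mu else 0.0
        if z >= z_threshold:
            lines.append(f"{host}/{etype}: count={count}, baseline={mu:.1f}, z={z:.1f}")
    return lines


if __name__ == "__main__":
    history = {
        ("web01", "failed_login"): [10, 12, 9, 11, 10],
        ("db01", "failed_login"): [2, 3, 2, 2, 3],
    }
    today = [("web01", "failed_login")] * 11 + [("db01", "failed_login")] * 40
    print("\n".join(daily_outliers(today, history)))  # only db01 is flagged
```

Sending the model a handful of flagged lines instead of thousands of raw events also keeps prompts short and reduces room for hallucinated detail.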

6. Security Awareness Training

How It Works:

Generative AI can tailor cybersecurity training content based on user roles, behavior, and past quiz performance.

Examples:

- Customized Lessons: Employees receive adaptive, role-specific training materials generated on the fly.

- Conversational Simulations: AI chatbots simulate social engineering attacks to train users in real time.

7. Natural Language Interfaces for Cyber Tools

How It Works:

Generative AI enables natural language querying of security data, making tools more accessible to non-technical users.

Examples:

- Instead of writing a complex query in Splunk, a user can ask, “Show me all failed logins from external IPs in the past 24 hours,” and the AI will translate that into the correct syntax.
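To make that translation concrete, here is what the plain-English request amounts to as structured logic, expressed in Python over parsed events rather than in Splunk's query language. The event field names (`action`, `src_ip`, `ts`) and the `login_failure` value are assumptions, and "external" is taken to mean a globally routable address.

```python
import ipaddress
from datetime import datetime, timedelta, timezone


def is_external(ip: str) -> bool:
    """Treat only globally routable addresses as external (assumed definition)."""
    return ipaddress.ip_address(ip).is_global


def failed_external_logins(events: list[dict], now: datetime, hours: int = 24) -> list[dict]:
    """Structured form of: 'Show me all failed logins from external IPs in the past 24 hours.'"""
    cutoff = now - timedelta(hours=hours)
    return [
        e for e in events
        if e["action"] == "login_failure"  # hypothetical value for a failed login
        and e["ts"] >= cutoff
        and is_external(e["src_ip"])
    ]


if __name__ == "__main__":
    now = datetime(2024, 6, 1, 12, 0, tzinfo=timezone.utc)
    events = [
        {"action": "login_failure", "src_ip": "8.8.8.8", "ts": now - timedelta(hours=2)},
        {"action": "login_failure", "src_ip": "10.0.0.5", "ts": now - timedelta(hours=1)},
        {"action": "login_failure", "src_ip": "8.8.8.8", "ts": now - timedelta(hours=30)},
        {"action": "login_success", "src_ip": "8.8.8.8", "ts": now - timedelta(hours=3)},
    ]
    print(failed_external_logins(events, now))  # only the first event matches
```

Writing down the intended filter this explicitly is also a useful way to review whether an AI-generated SIEM query really encodes all three conditions.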

Real-World Use Case:

- Elastic Security and Microsoft Security Copilot provide AI-driven search and analysis in plain English.

8. Red Team & Adversarial Simulation

How It Works:

Red teams use generative AI to simulate attacker behavior more realistically, crafting social engineering content, malware code snippets, and novel attack chains.

Examples:

- Adversarial Content Generation: Generative AI creates polymorphic malware or phishing pages for use in controlled environments.

- AI vs. AI Defense Testing: Defenders use generative AI to simulate novel attacks, which are then used to train AI-based detection systems.
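The AI vs. AI loop can be illustrated at toy scale: generate variants of a known lure, measure how many a naive detector still catches, and use the misses to harden it. In this sketch, simple string mutations (homoglyphs, spacing, case) stand in for a generative model's output, and both detectors are hypothetical keyword filters, not production detection logic.

```python
import random

SEED_LURE = "verify your password now"
# Latin letters mapped to visually similar Cyrillic characters.
HOMOGLYPHS = {"a": "\u0430", "o": "\u043e", "e": "\u0435"}
REVERSE = {v: k for k, v in HOMOGLYPHS.items()}


def mutate(text: str, rng: random.Random) -> str:
    """Stand-in for a generative model: one variant via homoglyphs, spacing, and case."""
    out = []
    for ch in text:
        if ch in HOMOGLYPHS and rng.random() < 0.3:
            ch = HOMOGLYPHS[ch]
        if ch == " " and rng.random() < 0.3:
            ch = "  "
        out.append(ch.upper() if rng.random() < 0.2 else ch)
    return "".join(out)


def naive_detect(text: str) -> bool:
    """Exact keyword match, as a brittle baseline detector."""
    return "verify your password" in text


def hardened_detect(text: str) -> bool:
    """Normalize case, homoglyphs, and whitespace before matching."""
    cleaned = "".join(REVERSE.get(ch, ch) for ch in text.lower())
    cleaned = " ".join(cleaned.split())
    return "verify your password" in cleaned


if __name__ == "__main__":
    rng = random.Random(7)  # fixed seed for a repeatable test set
    variants = [mutate(SEED_LURE, rng) for _ in range(200)]
    for detector in (naive_detect, hardened_detect):
        rate = sum(detector(v) for v in variants) / len(variants)
        print(f"{detector.__name__}: {rate:.0%} of variants caught")
```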

9. Compliance & Risk Reporting

How It Works:

Compliance teams use generative AI to draft policies, assess risk frameworks, and generate compliance documentation.

Examples:

- NIST/ISO Gap Analysis Reports: Automatically generated based on answers to security questionnaires.

- Policy Drafting: AI creates or updates security policies aligned with specific standards and regulations like ISO 27001 or HIPAA.
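A gap analysis report of this kind starts from a simple mapping of questionnaire answers to controls; a generative model would then draft the narrative around the gaps. The control references below follow the ISO/IEC 27001:2022 Annex A numbering style but are illustrative, as are the question keys and answer values.

```python
# Hypothetical mapping from questionnaire keys to control references.
CONTROL_MAP = {
    "mfa_enforced": ("A.8.5", "Secure authentication"),
    "backups_tested": ("A.8.13", "Information backup"),
    "vuln_scanning": ("A.8.8", "Management of technical vulnerabilities"),
    "ir_plan": ("A.5.24", "Incident management planning and preparation"),
}


def gap_report(answers: dict[str, str]) -> list[str]:
    """List controls answered with anything other than 'yes', including unanswered ones."""
    gaps = []
    for key, (control_id, title) in CONTROL_MAP.items():
        answer = answers.get(key, "unanswered").lower()
        if answer != "yes":
            gaps.append(f"{control_id} {title}: {answer}")
    return gaps


if __name__ == "__main__":
    answers = {"mfa_enforced": "yes", "backups_tested": "partial", "vuln_scanning": "no"}
    print("\n".join(gap_report(answers)))
```

Treating unanswered questions as gaps is a deliberate conservative choice: an LLM drafting the report should not be left to assume a control is in place.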

10. Malware Reverse Engineering Support

How It Works:

While it doesn’t replace skilled reverse engineers, generative AI can help interpret assembly code, explain malware behavior, and translate binary instructions into higher-level explanations.

Examples:

- AI tools assist in describing code functionality, API calls, and system modifications made by unknown binaries.
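One way analysts give an LLM useful context is to pre-map a binary's imported Windows API calls to well-known behavior patterns. The sketch assumes the import names were already extracted (for example with a PE parsing library) and uses a small, illustrative pattern table. A match is a hint for the analyst, not a verdict, since many of these APIs have legitimate uses.

```python
# Illustrative API combinations often discussed in malware triage.
BEHAVIOR_PATTERNS = {
    "possible process injection": {"VirtualAllocEx", "WriteProcessMemory", "CreateRemoteThread"},
    "possible registry persistence": {"RegOpenKeyExW", "RegSetValueExW"},
    "possible keystroke capture": {"SetWindowsHookExW", "GetAsyncKeyState"},
    "debugger detection": {"IsDebuggerPresent"},
}


def behavior_hints(imports: list[str]) -> list[str]:
    """Return behaviors whose complete API set appears in the import list."""
    present = set(imports)
    return [name for name, apis in BEHAVIOR_PATTERNS.items() if apis <= present]


if __name__ == "__main__":
    imports = ["VirtualAllocEx", "WriteProcessMemory", "CreateRemoteThread",
               "IsDebuggerPresent", "GetProcAddress"]
    print(behavior_hints(imports))  # ['possible process injection', 'debugger detection']
```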

Summary Table:

| Application | Description | Example Tool/Use Case |
| --- | --- | --- |
| Threat Intelligence Generation | Summarizing & synthesizing threat data | Recorded Future |
| Automated IR Playbooks | Generate response workflows | Cortex XSOAR |
| Phishing Simulation & Detection | Detect or simulate AI-generated phishing | KnowBe4, Cofense |
| Vulnerability Patch Suggestions | Recommend fixes based on CVEs | GitHub Copilot |
| Log Analysis & Alert Triage | Summarize and prioritize alerts | Exabeam, Splunk |
| Security Awareness Training | Tailor content and simulate attacks | Custom AI chatbots |
| Natural Language Security Querying | Plain language interfaces for SIEM | Microsoft Security Copilot |
| Red Team Simulation | Realistic AI-driven attack scenarios | Custom Red Team Tools |
| Compliance Automation | Draft policies and risk reports | Internal AI tools |
| Reverse Engineering Support | Explain malware code behaviors | Reverse engineering AI |

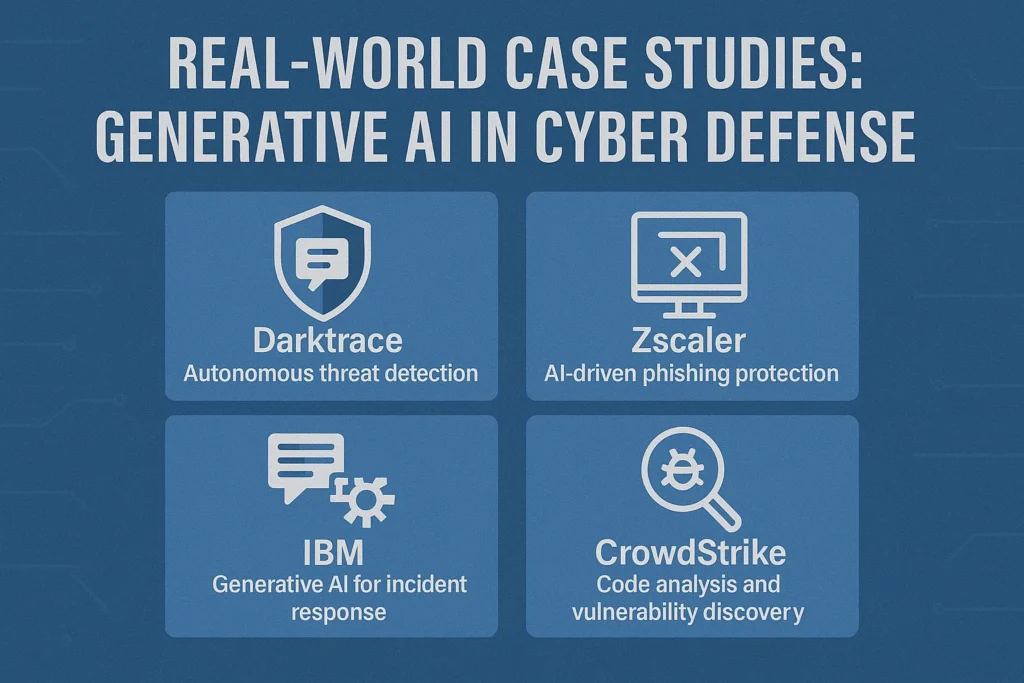

Real-World Case Studies: Generative AI in Cyber Defense

How can generative AI be used in cybersecurity? The answer is already unfolding in real-world environments where advanced AI models transform how organizations detect, investigate, and respond to cyber threats.

From enhancing SIEM use cases in 5G environments to analyzing threat intelligence gathered from dark web search engines and alternative tools like NotEvil, AI plays a pivotal role in modern cyber defense.

No longer limited to research labs, generative AI is now integrated into production-level security tools across Fortune 500 companies and government agencies.

It also helps raise awareness through public-facing content, such as curated cybersecurity YouTube channels, and can provide critical insight into user behavior, helping individuals avoid the high-risk actions behind the most significant personal cybersecurity risks.

These case studies highlight how generative AI is reshaping cyber defense strategies at scale:

1. Microsoft Security Copilot: AI for Threat Investigation

Company: Microsoft

Application: AI-enhanced Security Operations (SecOps)

Technology: GPT-powered Natural Language Processing

Details:

Microsoft launched Security Copilot, an AI assistant integrated with Microsoft Defender and Sentinel. It uses generative AI (based on OpenAI’s GPT models) to help security analysts interpret alerts, summarize incidents, and draft detection queries from natural-language prompts.

Key Outcomes:

- Reduced incident investigation time by 60–80%.

- Enabled faster creation of KQL queries via natural prompts.

- Enhanced analyst productivity with contextual threat summaries.

2. IBM Watsonx: Synthetic Data for Secure AI Training

Company: IBM

Application: Synthetic Data Generation for Privacy

Technology: Generative Models in Watsonx.ai

Details:

IBM’s Watsonx leverages generative AI to create synthetic datasets that closely mimic real-world data while preserving user privacy. This synthetic data trains machine learning models without violating data protection laws like GDPR and HIPAA.


Key Outcomes:

- Improved accuracy of threat prediction models.

- Protected sensitive user data in healthcare and finance.

- Reduced data access risks during model training.

3. Palo Alto Cortex XSIAM: Predicting Zero-Day Malware

Company: Palo Alto Networks

Application: Advanced Threat Prevention

Technology: Generative AI for Malware Variant Simulation

Details:

Palo Alto’s Cortex XSIAM uses generative models to simulate new malware and ransomware variants based on learned attacker behaviors. This proactive approach allows security systems to detect polymorphic and zero-day malware before it spreads.

Key Outcomes:

- Detected never-before-seen ransomware variants.

- Reduced time-to-containment by over 70%.

- Strengthened endpoint security through predictive modeling.

4. Darktrace Antigena: Autonomous Response Engine

Company: Darktrace

Application: Autonomous Threat Mitigation

Technology: Self-Learning Generative Models

Details:

Darktrace’s Antigena module uses AI to automatically contain threats in real time. It learns network behavior autonomously and uses generative techniques to predict attacker lateral movement, enabling split-second responses.

Case Study:

Antigena detected and neutralized a novel ransomware attack at a European law firm within 20 seconds, well before any human intervention.

Key Outcomes:

- Real-time autonomous remediation.

- Reduced false positives through behavior baselining.

- Minimized business disruption and downtime.

5. Google Chronicle AI: Big Data Meets GenAI

Company: Google Cloud

Application: Threat Intelligence & Telemetry Analysis

Technology: GenAI + Chronicle Security Operations

Details:

Chronicle leverages generative AI to analyze vast telemetry datasets, surface threat indicators, and assist security analysts with natural language queries. Its large-scale threat correlation helps identify previously undetectable anomalies.

Key Outcomes:

- Streamlined alert triage and prioritization.

- Enhanced signal-to-noise ratio for security teams.

- Language-based investigation automation.

These use cases demonstrate that generative AI in cybersecurity is about more than automation; it’s about intelligent augmentation, predictive defense, and autonomous decision-making.

These tools are actively helping organizations stay ahead of emerging threats, from enhancing SOC analyst workflows to generating synthetic data and simulating attacker strategies.


Benefits of Using Generative AI in Cybersecurity

The integration of generative AI into cybersecurity brings powerful capabilities that go beyond traditional rule-based systems.

By learning from vast datasets and simulating real-world attack patterns, generative models empower security teams to detect threats faster, respond more effectively, and predict vulnerabilities before they’re exploited.

Here are the core benefits of using generative AI in cybersecurity:

Faster Threat Detection and Triage

Generative AI enables real-time analysis of logs, alerts, and threat intelligence by generating contextual summaries and surfacing patterns. Tools like Microsoft Security Copilot and Google Chronicle AI use natural language models to help analysts cut through alert fatigue and focus on actual incidents.

Why it matters: It reduces the Mean Time to Detect (MTTD) and allows SOC teams to prioritize critical threats more efficiently.


Automated Incident Response

Platforms like Darktrace Antigena use generative models to contain or neutralize threats autonomously before human intervention. These systems simulate attacker behavior and respond based on predicted risk levels.

Why it matters: It shortens the Mean Time to Respond (MTTR), especially in high-speed attacks like ransomware or insider threats.
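Both metrics are simple averages over incident timestamps, which makes it straightforward to measure whether an AI-assisted workflow actually moves them. The sketch assumes each incident records when it started, was detected, and was resolved. Conventions vary between teams; here MTTR is measured from detection to resolution.

```python
from datetime import datetime, timedelta
from statistics import mean


def mttd_mttr(incidents: list[dict]) -> tuple[timedelta, timedelta]:
    """Mean time to detect (started -> detected) and to respond (detected -> resolved)."""
    detect = [(i["detected"] - i["started"]).total_seconds() for i in incidents]
    respond = [(i["resolved"] - i["detected"]).total_seconds() for i in incidents]
    return timedelta(seconds=mean(detect)), timedelta(seconds=mean(respond))


if __name__ == "__main__":
    t0 = datetime(2024, 3, 1, 9, 0)
    incidents = [
        {"started": t0, "detected": t0 + timedelta(hours=4), "resolved": t0 + timedelta(hours=10)},
        {"started": t0, "detected": t0 + timedelta(hours=2), "resolved": t0 + timedelta(hours=4)},
    ]
    mttd, mttr = mttd_mttr(incidents)
    print(mttd, mttr)  # 3:00:00 4:00:00
```

Comparing these figures for the same incident categories before and after a pilot gives a more honest picture than vendor-reported percentages.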

Synthetic Data for Safe Model Training

Generative AI can create synthetic datasets that mimic real-world network and user behavior without exposing personal or regulated data. This allows for safe training of machine learning models in sensitive environments like healthcare or finance.

Why it matters: Improves detection capabilities without compromising user privacy or regulatory compliance.

Predictive Threat Modeling

By learning from historical attacks, generative models can simulate new or emerging attack vectors even before they occur in the wild. This proactive defense model helps prepare systems for novel threats like polymorphic malware or unknown vulnerabilities.

Why it matters: Shifts cybersecurity from reactive to predictive, enhancing overall resilience.

Augmented Analyst Capabilities

Generative AI assists cybersecurity professionals by writing detection rules, summarizing threats, suggesting remediation steps, and generating reports. This augmentation eases workloads and helps close skill gaps, especially in under-resourced SOCs.

Why it matters: Enhances human decision-making and helps junior analysts perform at a higher level.

Scalable Security Across Large Environments

Generative AI models can process and correlate signals across massive datasets from cloud workloads, endpoints, and networks, something human analysts can’t do in real time.

Why it matters: Ensures intelligent monitoring across hybrid and multi-cloud infrastructures.

Risks and Challenges of Applying Generative AI in Security

While generative AI offers powerful benefits, applying it to cybersecurity also carries risks. Misuse, overreliance, and lack of transparency can all undermine its effectiveness and even introduce new attack vectors.

- False Positives and Model Hallucination

Generative models, especially large language models, can “hallucinate,” generating inaccurate or misleading information. In cybersecurity, this could result in incorrect alert summaries, misclassified threats, or faulty recommendations.

- Adversarial Use of Generative AI

Attackers can also use generative AI to craft convincing phishing emails, deepfake identities, or polymorphic malware that changes its signature to evade detection, escalating the threat landscape.

- Model Exploitation and Poisoning

Generative models trained on unsecured or manipulated datasets can be poisoned, leading to inaccurate predictions or even backdoors hidden within AI systems.

- Data Privacy and Compliance Risks

Using sensitive data to train generative models, even unintentionally, can violate regulations and standards like GDPR, HIPAA, or PCI-DSS if the data is not properly anonymized or replaced with synthetic datasets.

- High Resource Costs and Talent Gaps

Implementing generative AI in cybersecurity requires computational resources and skilled personnel. Many small to mid-sized businesses may struggle with this investment or fail to operationalize the tools effectively.
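A common mitigation for the privacy risk is to redact obvious identifiers before log lines ever reach an external model. The sketch below uses regular expressions for email addresses, IPv4 addresses, and a hypothetical `user=` log field. Regex redaction is a first line of defense, not a compliance guarantee; real deployments usually pair it with an allowlist of fields that may be sent at all.

```python
import re

# Applied in order; each pattern is replaced with a neutral placeholder.
REDACTIONS = [
    (re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"), "<EMAIL>"),
    (re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"), "<IP>"),
    (re.compile(r"\buser=\S+"), "user=<USER>"),  # hypothetical log field format
]


def redact(line: str) -> str:
    """Replace sensitive tokens with placeholders before sending text to an LLM."""
    for pattern, placeholder in REDACTIONS:
        line = pattern.sub(placeholder, line)
    return line


if __name__ == "__main__":
    line = "Failed login user=jdoe from 192.168.1.20, alert sent to soc@example.com"
    print(redact(line))  # Failed login user=<USER> from <IP>, alert sent to <EMAIL>
```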

Future of Generative AI in Cybersecurity Operations

Generative AI will continue to evolve from an assistive tool to a core component of automated security ecosystems. Some key trends shaping its future include:

- AI-Native SOCs

Security operations centers (SOCs) will become AI-native, with LLMs handling tier-1 triage, autonomous threat response, and continuous learning from incident data.

- Real-Time AI Agents

Generative agents will simulate attack paths and coordinate live defenses, acting like autonomous teammates within incident response workflows.

- Cross-Domain Intelligence Sharing

Federated and secure models will allow organizations to collaborate without data exposure, training AI across multiple enterprises for shared threat intelligence.

- Explainable and Transparent AI Models

As regulatory and ethical standards mature, future generative models will need to be interpretable and offer explainable reasoning behind detections and decisions.

- Defensive Use of Adversarial AI

Security teams will begin using generative adversarial techniques to stress-test their environments, proactively exposing weak spots before attackers find them.
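The federated approach behind cross-domain intelligence sharing rests on a simple aggregation step: each organization trains locally and shares only model parameters, which a coordinator averages weighted by local dataset size (the FedAvg scheme). The toy sketch below uses plain Python lists as stand-ins for model weights; real systems add secure aggregation and often differential privacy, both omitted here.

```python
def federated_average(updates: list[tuple[list[float], int]]) -> list[float]:
    """Weighted average of client parameter vectors (the FedAvg aggregation step).

    Each update is (parameters, number_of_local_training_examples).
    Raw training data never leaves the client; only parameters are shared.
    """
    total = sum(n for _, n in updates)
    if total == 0:
        raise ValueError("at least one client must report training examples")
    dim = len(updates[0][0])
    if any(len(params) != dim for params, _ in updates):
        raise ValueError("all clients must share the same model shape")
    return [sum(params[i] * n for params, n in updates) / total for i in range(dim)]


if __name__ == "__main__":
    # Two organizations: one trained on 100 examples, the other on 300.
    print(federated_average([([1.0, 2.0], 100), ([3.0, 4.0], 300)]))  # [2.5, 3.5]
```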

How Organizations Can Start Using Generative AI in Cybersecurity

Adopting generative AI in cybersecurity doesn’t mean overhauling your entire security infrastructure overnight.

Organizations can take a phased and strategic approach to integrate generative AI tools that align with their existing SOC processes, compliance needs, and maturity levels.

Here’s a step-by-step guide to getting started:

1. Identify Use Cases Aligned with Pain Points

Start by assessing which parts of your security operations are most resource-constrained. Typical starting points include:

- Alert overload in the SOC

- Time-consuming incident investigations

- Manual writing of detection rules or reports

- Lack of skilled analysts for threat hunting

Pinpointing these will help determine whether you need analyst augmentation, autonomous response, or synthetic data generation.

2. Deploy Low-Risk Generative AI Tools First

Instead of immediately adopting high-stakes, autonomous response tools, begin with assistive AI applications like:

- Microsoft Security Copilot – helps summarize incidents and generate queries using natural language.

- Google Chronicle AI – enables analysts to interact with log data via plain-language questions.

- Open-source LLM integrations – fine-tune smaller models (e.g., LLaMA or Mistral) for internal alert triage or documentation.

This lowers operational risk while getting teams familiar with generative AI workflows.

3. Integrate Synthetic Data Generation

For organizations working with sensitive or regulated data (e.g., healthcare, finance, government), integrating synthetic data using platforms like IBM Watsonx can unlock the ability to:

- Train ML models safely.

- Simulate attack scenarios.

- Perform threat modeling in sandboxed environments.

Synthetic data generation also supports red-teaming without risking real systems or data exposure.

4. Pilot Autonomous Threat Detection Systems

Once your team is comfortable with assistive tools, consider testing predictive or autonomous platforms like:

- Palo Alto Cortex XSIAM – predictive threat simulation and malware variant generation

- Darktrace Antigena – real-time autonomous response to anomalies

- Vectra AI – generative AI-based detection of lateral movement in hybrid networks

Deploy in monitor-only mode initially to evaluate the tool’s decisions against human analyst workflows.
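In monitor-only mode, the tool's would-be actions can be logged next to what analysts actually decided and scored afterward. The sketch assumes both are recorded as a per-alert boolean ("should this be contained?") keyed by a hypothetical alert ID, with the analyst decision treated as ground truth. That treatment is itself an assumption, since analysts also make mistakes.

```python
def compare_decisions(ai: dict[str, bool], analyst: dict[str, bool]) -> dict[str, float]:
    """Agreement, precision, and recall of AI containment calls vs. analyst calls."""
    shared = ai.keys() & analyst.keys()  # only alerts both sides ruled on
    tp = sum(ai[a] and analyst[a] for a in shared)
    fp = sum(ai[a] and not analyst[a] for a in shared)
    fn = sum(not ai[a] and analyst[a] for a in shared)
    agree = sum(ai[a] == analyst[a] for a in shared)
    return {
        "agreement": agree / len(shared) if shared else 0.0,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


if __name__ == "__main__":
    ai = {"a1": True, "a2": True, "a3": False, "a4": False, "a5": True}
    analyst = {"a1": True, "a2": False, "a3": True, "a4": False, "a5": True}
    print(compare_decisions(ai, analyst))
```

Low precision means the tool would have disrupted systems unnecessarily; low recall means it would have missed threats analysts contained. Both matter before switching to enforcement.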

5. Establish Governance, Monitoring, and Human-in-the-Loop Oversight

To ensure trust, accountability, and safety, implement:

- Clear audit trails for every AI-driven action.

- Review loops where analysts validate AI recommendations.

- Bias checks and red teaming for model outputs.

- Access controls to prevent overuse or misuse.

Generative AI must augment human analysts, not replace them, especially when decisions affect risk posture or compliance.
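Audit trails and review loops can be combined in a small approval gate: every AI-proposed action is logged, low-impact actions proceed, and high-impact ones wait for a named analyst. The sketch below uses a hypothetical set of action names and an in-memory log; a real deployment would write to tamper-evident storage and use the SOAR platform's own approval features.

```python
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional

HIGH_IMPACT = {"disable_account", "isolate_host", "delete_file"}  # hypothetical action names


@dataclass
class ApprovalGate:
    audit_log: list[str] = field(default_factory=list)

    def _record(self, **entry) -> None:
        """Append a timestamped JSON entry so every decision is traceable."""
        entry["at"] = datetime.now(timezone.utc).isoformat()
        self.audit_log.append(json.dumps(entry, sort_keys=True))

    def submit(self, action: str, target: str, rationale: str,
               approver: Optional[str] = None) -> bool:
        """Log an AI-proposed action and return True if it may execute now."""
        needs_human = action in HIGH_IMPACT
        allowed = not needs_human or approver is not None
        self._record(action=action, target=target, rationale=rationale,
                     approver=approver, needs_human=needs_human, allowed=allowed)
        return allowed


if __name__ == "__main__":
    gate = ApprovalGate()
    print(gate.submit("collect_triage", "WS-042", "ransomware-like file renames"))  # True
    print(gate.submit("isolate_host", "WS-042", "ransomware-like file renames"))    # False
    print(gate.submit("isolate_host", "WS-042", "ransomware-like file renames",
                      approver="analyst.kim"))                                       # True
```

Blocked requests are logged too, so reviewers can see what the AI wanted to do, not only what it was allowed to do.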

6. Upskill Your Security Team in AI Literacy

Equip your cybersecurity personnel with a foundational understanding of:

- How generative models are trained.

- Where and why they hallucinate.

- Prompt engineering and fine-tuning basics.

- Responsible AI and compliance implications.

This empowers your SOC to work with the AI, not around it.

Final Thoughts

The future of cyber defense will be increasingly defined by how effectively organizations adopt AI and, more specifically, how well they use generative AI in cybersecurity to defend against and anticipate threats.

Generative AI offers a powerful toolkit for accelerating threat detection, generating synthetic training data, and automating response mechanisms.

However, success depends on thoughtful implementation, continuous oversight, and a well-trained team that understands this evolving technology’s strengths and risks.

Organizations that begin integrating generative AI today, even at a small scale, will be far better positioned to defend against tomorrow’s threats.

For in-depth insights, expert resources, and practical tools, explore more at CyberLad.io.

Frequently Asked Questions

What is the role of generative AI in enhancing threat intelligence and cybersecurity measures?

Generative AI plays a growing role in improving cybersecurity by enabling the creation of realistic threat models and simulated attacks. These models help organizations better understand potential vulnerabilities, anticipate adversarial behavior, and evaluate the effectiveness of their defenses. By generating synthetic data and predictive scenarios, generative AI supports proactive threat intelligence and enhances an organization’s ability to stay ahead of emerging cyber threats.

What is responsible AI in cybersecurity?

Responsible AI in cybersecurity refers to the ethical and secure use of AI technologies within defensive operations. According to Microsoft’s Responsible AI Standard, this involves building AI systems based on six key principles: fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability. These principles help ensure that AI-driven security solutions are trustworthy, unbiased, and aligned with both ethical standards and regulatory requirements.

Can AI replace cybersecurity professionals?

AI is not here to replace cybersecurity professionals but to enhance their capabilities. While AI can automate repetitive tasks such as log analysis or alert triage, human analysts are essential for strategic decision-making, ethical oversight, and complex incident response. The future of cybersecurity depends on a human-AI partnership, where both work together to improve efficiency and accuracy in threat detection and response.

What problems can generative AI solve in cybersecurity?

Generative AI can address several critical challenges in cybersecurity, including:

- Fraud detection through pattern analysis

- Threat simulation for red team/blue team exercises

- Automated content generation for phishing awareness training

- Synthetic data generation for safe model development

Beyond cybersecurity, it also drives innovation across sectors by helping companies ideate, create, and experiment with new solutions.

How can AI be a security threat itself?

While AI strengthens cyber defenses, it also introduces new risks. One primary concern is adversarial attacks, where malicious actors manipulate input data to trick AI models into making incorrect decisions, such as misidentifying malware or allowing unauthorized access. This highlights the need for robust AI governance, secure training data, and ongoing model validation as part of any AI-infused security architecture.

How can generative AI help in cybersecurity threat detection?

Generative AI models can recognize complex attack patterns, predict potential threats, and suggest real-time mitigation strategies based on historical and simulated data.

What are examples of generative AI in cybersecurity?

Tools like Microsoft Security Copilot, IBM Watson for Cybersecurity, and Darktrace leverage generative AI for advanced threat analysis and incident response.

What are the risks of using generative AI in cybersecurity?

Generative AI can sometimes produce inaccurate results, face data poisoning attacks, or be used by adversaries to automate new types of cyberattacks.