What Is CSAM in Cybersecurity?

CSAM in cybersecurity refers to Child Sexual Abuse Material, illegal content involving minors that may be found during digital investigations, malware analysis, or forensic audits.

It poses serious legal, ethical, and technical risks, making proper detection and handling a critical responsibility for cybersecurity professionals.

While often under-discussed, CSAM in cybersecurity is a growing concern as professionals encounter digital evidence that crosses legal and moral boundaries.

Whether during threat hunts, forensic recovery, or malware reverse engineering, the risk of stumbling upon CSAM is real, and knowing what to do next can be the difference between compliance and liability.

Where Cybersecurity Professionals Encounter CSAM

CSAM isn’t something cybersecurity teams actively seek out, but in today’s hyperconnected threat landscape, they increasingly encounter it in the digital wild.

Reversing malware, digging into a compromised device, or scanning a company’s cloud storage can each surface Child Sexual Abuse Material without warning, and the implications are serious.

Here’s a breakdown of where CSAM typically appears in the cybersecurity ecosystem, what the exposure timeline looks like, and how teams are expected to respond:

1. Digital Forensics and Incident Response (DFIR)

Context:

Forensic analysts are often called in to analyze devices post-breach. While recovering deleted files, parsing disk images, or reconstructing file systems, they may uncover CSAM even when that wasn’t the focus of the investigation.

Timeline of Exposure:

- T0: Forensic image capture begins.

- T+1–2 hrs: Analysts start parsing file structures, uncovering hidden directories or encrypted containers.

- T+2–4 hrs: Suspicious files surface, flagged by hash matches (e.g., Project VIC database).

Real-World Case:

A U.S.-based consulting firm was analyzing a compromised server involved in a ransomware event. During recovery, they found a stash of encrypted files that, once decrypted, contained CSAM.

The firm halted all analysis, documented the discovery, and escalated to federal authorities within 3 hours. The investigation led to a separate federal case unrelated to the breach.

2. Malware Reverse Engineering

Context:

Certain malware families, especially RATs (Remote Access Trojans), grant attackers full file system access, allowing them to store illicit content on infected machines or retrieve it from them.

Analysts reversing these tools may find hardcoded URLs or payloads linked to CSAM distribution.

Exposure Triggers:

- Discovery of hidden directories during unpacking

- C2 server references to known CSAM distribution platforms

- Obfuscated image payloads embedded in trojanized software

Risk Timeline:

- T0–T+1 hr: Static and dynamic analysis performed

- T+2–3 hrs: Suspicious traffic identified

- T+4+ hrs: Dumped content reviewed, potential CSAM confirmed

Example:

A security researcher analyzing a cracked version of a popular remote desktop utility found that it silently downloaded encrypted zip files from a Tor hidden service.

Upon manual inspection, these included CSAM. The researcher contacted NCMEC and discontinued public analysis of the sample.

3. Cloud Storage & Corporate SaaS Platforms

Context:

Corporate tools like Google Workspace, OneDrive, or Dropbox can be abused by employees or external attackers to store CSAM because of their perceived privacy.

When cybersecurity teams audit storage usage, compliance violations, or data exfiltration, they may run into illicit files.

Detection Methods:

- DLP (Data Loss Prevention) rule violations

- File hash matching (PhotoDNA)

- Internal whistleblowers reporting unusual content

Timeline:

- T0: Audit or alert triggered by DLP or abnormal usage

- T+1–3 hrs: Manual review reveals CSAM indicators

- T+3+ hrs: HR/legal escalation and law enforcement notified

Data Point:

In 2022, Microsoft and Google collectively reported over 1 million CSAM detection incidents on their cloud platforms, some tied to enterprise accounts accessed by insiders.

4. Threat Intelligence & Dark Web Monitoring

Context:

CTI analysts scraping dark web forums, breach dumps, or Telegram channels may unintentionally pull CSAM into internal systems. This is especially risky when tools are set to ingest files blindly or download content for enrichment.

Exposure Timeline:

- T0: CTI bot scrapes a new forum post or dump

- T+1 hr: Data lands in internal storage or sandbox

- T+2–4 hrs: Analysts reviewing dumps detect CSAM content

Mitigation Strategy:

- Sandboxing tools should sanitize all inbound data

- Content scanning must be layered before storage

- Logs and access controls must be tightly monitored to see who sees what

Incident Example:

An EDR vendor was scraping Telegram breach channels when one archive included CSAM as part of a ransomware leak.

Though automated systems ingested the file, the vendor’s detection stack flagged it and prevented employee access. The content was reported to authorities, and the channel was taken down.

5. Insider Threats & Endpoint Investigations

Context:

Sometimes, the threat is internal. Insider threat investigations occasionally uncover employees possessing or distributing CSAM through corporate networks or on company devices. This triggers both legal escalation and potential company liability.

Detection Timeline:

- T0: Suspicious activity (e.g., encrypted USB use, file sharing flagged)

- T+1–2 hrs: Endpoint analysis begins

- T+3–6 hrs: CSAM confirmed, systems isolated, authorities contacted

Notable Risk:

The discovery of CSAM on company systems can lead to criminal charges against the employee and civil suits against the company for failing to prevent or detect abuse earlier, especially in regulated industries like healthcare or education.

Legal & Ethical Minefield

Encountering CSAM in a cybersecurity context isn’t just an uncomfortable moment; it’s a legal and ethical bombshell. Mishandling it can expose an analyst to the same possession and distribution statutes that are used against offenders.

And while laws are often clear-cut in intent, the realities of forensic work, malware analysis, and data collection blur those lines fast.

The Legal Landscape

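Handling CSAM, even for investigative purposes, is heavily criminalized across jurisdictions. Knowingly possessing or accessing it is a serious criminal offense in the U.S., the UK, EU member states, and most other regions, and an analyst who keeps working with a file after recognizing what it is can no longer treat the exposure as accidental.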

Key Laws You Should Know:

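| Region | Relevant Law | Penalty Range |
|---|---|---|
| U.S. | 18 U.S. Code § 2252 | 5–20 years for distribution or receipt; up to 10 years for possession (up to 20 if the material depicts a prepubescent minor) |
| UK | Protection of Children Act 1978 (as amended by the Sexual Offences Act 2003); Criminal Justice Act 1988, s. 160 | Up to 10 years for making or distributing; up to 5 years for possession |
| EU | Directive 2011/93/EU | Harmonized minimum penalties; reporting rules set by member states |
| Canada | Criminal Code § 163.1 | Up to 14 years for making or distribution; up to 10 years for possession |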

Warning: A legitimate purpose is not a defense by itself. If you download, open, copy, or store CSAM, even as part of your job, you could be held criminally liable without airtight documentation and immediate escalation.

Chain of Custody: A Legal Tightrope

If CSAM is discovered during a forensic or investigative process, it immediately becomes evidence of a separate crime. This puts you at the center of a criminal investigation, potentially as a witness, or worse, as a suspect if the chain of custody is broken.

Legal Protocol Timeline:

| Time | Action |
|---|---|
| T0 | Detection by analyst/tool |
| T+0–1 hr | Immediately stop analysis and isolate the file/system |
| T+1–2 hrs | Report up the chain (CISO, legal, HR) |
| T+2–4 hrs | Notify law enforcement / national reporting body (e.g. NCMEC, Internet Watch Foundation) |
| T+4+ hrs | Ensure logs, hashes, access controls are preserved for investigation |

Failing to report promptly or attempting to analyze further can invalidate evidence and expose your organization to criminal and civil liability.
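The timeline above can be turned into a simple deadline tracker for an IR playbook. The following is a minimal sketch, assuming the upper bound of each window is treated as a hard deadline; the step labels, the `PROTOCOL_STEPS` structure, and both function names are illustrative and not part of any standard.

```python
from datetime import datetime, timedelta, timezone

# Upper bound of each window in the timeline above, in hours after T0.
# The labels paraphrase the table; the structure and names are illustrative.
PROTOCOL_STEPS = [
    ("stop analysis and isolate", 1),
    ("report up the chain", 2),
    ("notify law enforcement", 4),
]


def escalation_deadlines(detected_at: datetime) -> dict[str, datetime]:
    """Map each protocol step to its latest acceptable completion time."""
    return {step: detected_at + timedelta(hours=hours) for step, hours in PROTOCOL_STEPS}


def overdue_steps(detected_at: datetime, completed: set[str], now: datetime) -> list[str]:
    """List steps that are past their deadline and not yet marked complete."""
    deadlines = escalation_deadlines(detected_at)
    return [step for step, due in deadlines.items() if step not in completed and now > due]


t0 = datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)
status = overdue_steps(t0, completed={"stop analysis and isolate"}, now=t0 + timedelta(hours=3))
print(status)  # ['report up the chain']
```

Tracking only step names and timestamps keeps the tracker free of any reference to the content itself, which fits the stop-analysis rule in the first row.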

The Ethical Dilemma: Between Duty and Danger

CSAM in cybersecurity creates a no-win ethical scenario:

- Do you open the suspicious file to confirm it?

- Do you hash-match every suspicious image or only those flagged by AI?

- What if AI makes a false positive and flags innocent family photos?

- What if a forensic contractor finds CSAM but doesn’t report it?

These questions don’t have easy answers, but they must be pre-answered in your organization’s IR and legal response playbook. Waiting until you’re in the moment is a risk you can’t afford.

Ethical Reality: Analysts often face psychological trauma, fear of legal reprisal, or hesitation to report for fear of being implicated. Ethical training, psychological support, and clear SOPs are as essential as firewalls and SIEM logs.

False Positives: A Dangerous Gray Zone

Automated detection tools like PhotoDNA, Project Arachnid, and Google’s CSAI Match are powerful but not perfect. Mistaking a false positive (e.g., medical imagery, art, family photos) for CSAM can lead to:

- Wrongful reporting

- Legal blowback

- Damaged reputations

Recommendation: Use AI as a first-layer filter but always escalate to human-verified, law-enforcement-guided confirmation. Never store or analyze suspicious content locally without legal clearance.

The legal and ethical terrain around CSAM is brutal. Missteps don’t just cost careers; they invite prison time. If you operate in digital forensics, malware analysis, or threat intel, you must have:

- Clear SOPs for CSAM discovery

- A zero-delay escalation protocol

- Documentation of actions taken

- Training for legal risk and psychological safety

CSAM is the kind of threat that makes even seasoned analysts freeze. The best defense? Knowing exactly what to do before it happens.

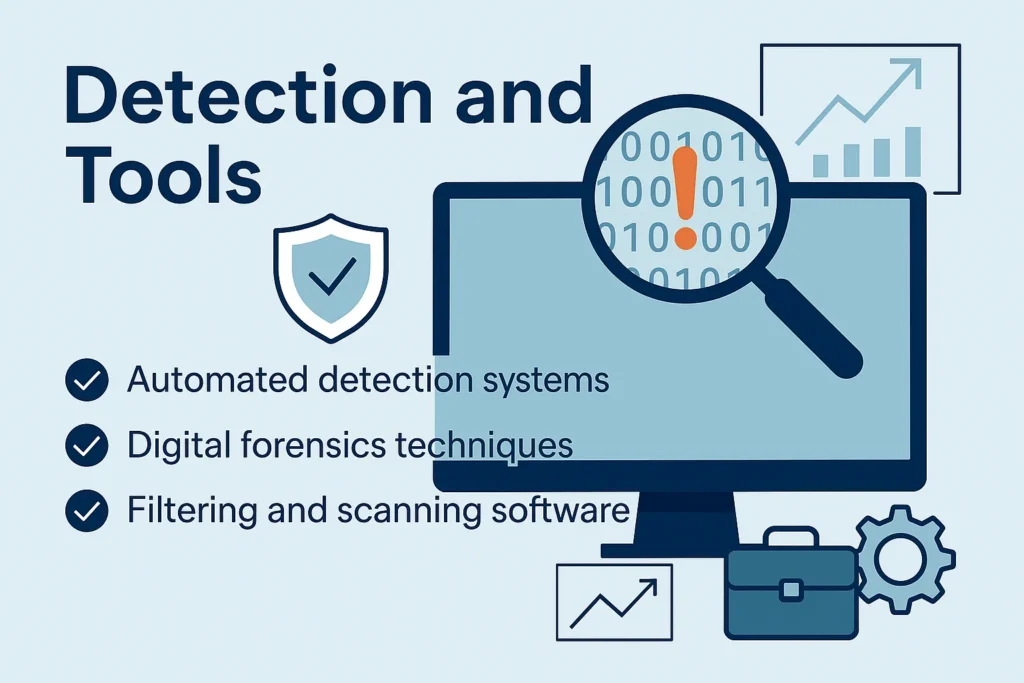

Detection and Tools

Detecting CSAM (Child Sexual Abuse Material) in cybersecurity workflows is a technical, legal, and moral minefield. You can’t afford false negatives, but false positives can be almost as damaging.

The tools available today walk a razor’s edge between efficacy and overreach. Here’s how cybersecurity teams approach CSAM detection without crossing legal or ethical lines.

1. Hash-Based Matching (PhotoDNA, Project VIC)

How it works:

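These systems compare scanned content against curated databases of hashes of known CSAM images and videos. Cryptographic hashes (MD5, SHA-1, SHA-256) match only byte-identical copies; perceptual hashes such as PhotoDNA are derived from pixel values and still match after resizing or recompression.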

Popular Tools:

- PhotoDNA (Microsoft/NCMEC) – Converts images into hash signatures using pixel values, not raw file content

- Project VIC – Law enforcement-focused CSAM database used in digital forensics

- HCCE (Hash-based Child Exploitation Evidence) – NIST-supported hash set for forensic tools

Advantages:

- Fast and legally vetted

- Low false positives (only known CSAM is flagged)

Limitations:

- Cannot detect new/unhashed content

- Cryptographic hashes are easily bypassed by minor file alterations (cropping, recompression), and no hash match survives encryption of the file
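The exact-match limitation of cryptographic hashes is easy to demonstrate with harmless placeholder data. This is a minimal sketch using Python's standard `hashlib`; the in-memory `known_hashes` set and the `is_known` helper are hypothetical stand-ins for a vetted hash database, and perceptual hashing (as in PhotoDNA) is not shown.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the given bytes as a hex string."""
    return hashlib.sha256(data).hexdigest()


# Harmless placeholder bytes standing in for a file already in a hash set.
known_file = b"placeholder bytes for a catalogued file"
known_hashes = {sha256_hex(known_file)}  # hypothetical in-memory hash set


def is_known(data: bytes) -> bool:
    """Exact-match lookup: True only for byte-identical content."""
    return sha256_hex(data) in known_hashes


exact_copy = bytes(known_file)
altered_copy = known_file + b"\x00"  # a single appended byte

print(is_known(exact_copy))    # True
print(is_known(altered_copy))  # False
```

A one-byte change produces an unrelated digest, which is why cryptographic hash sets are fast and precise for known files but blind to anything re-encoded or edited.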

2. AI-Powered Content Analysis

How it works:

Machine learning models scan images and videos for visual cues of explicit content, even if hashes don’t match. These models often use convolutional neural networks trained on illegal and borderline imagery.

Key Tools:

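- Google Content Safety API – Classifier that helps partners prioritize likely abusive imagery for review (Google’s CSAI Match, used on YouTube, is a separate tool that fingerprints known abusive video)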

- Thorn’s Safer – ML-powered platform for social platforms and hosting providers

- Facebook’s AI Matching – Proprietary models used for content moderation and CSAM flagging

Pros:

- Detects new, uncatalogued CSAM

- Operates at scale across cloud systems

Cons:

- High false-positive risk

- Often black-box and not explainable

- Cannot be relied on solely for legal action

Warning: AI-only flags should never be manually reviewed by cybersecurity staff without legal clearance. AI can lead you to the edge of a felony without warning.

3. Enterprise DLP & File Monitoring Tools

Context:

Many organizations deploy Data Loss Prevention (DLP) systems to flag inappropriate content in emails, uploads, or file shares. These systems often support custom rulesets to detect base64-encoded images, suspicious filenames, or known CSAM hashes.
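As a rough illustration of the kind of custom rule described above, the sketch below flags text that carries a base64-encoded image by checking the decoded prefix against common image signatures. It is an assumption-laden example, not the rule syntax of any DLP product: the `embedded_image_types` function, the 24-character threshold, and the three signatures are all illustrative choices.

```python
import base64
import binascii
import re

# File signatures (magic bytes) for a few common image formats.
IMAGE_MAGIC = {
    b"\xff\xd8\xff": "jpeg",
    b"\x89PNG\r\n\x1a\n": "png",
    b"GIF8": "gif",
}

# Runs of 24 or more base64 characters; the threshold is an arbitrary choice.
B64_RUN = re.compile(r"[A-Za-z0-9+/]{24,}={0,2}")


def embedded_image_types(text: str) -> list[str]:
    """Return the image types found as base64 blobs in text, without decoding the bodies."""
    found = []
    for match in B64_RUN.finditer(text):
        head = match.group(0)[:16]  # 16 base64 characters decode to 12 bytes
        try:
            prefix = base64.b64decode(head, validate=True)
        except (binascii.Error, ValueError):
            continue
        for magic, kind in IMAGE_MAGIC.items():
            if prefix.startswith(magic):
                found.append(kind)
    return found
```

Only the first twelve bytes of each blob are decoded, so the rule can report that an image is present without reconstructing or storing it. A blob glued directly to preceding alphanumeric text would be misaligned and missed, which is one reason such rules need tuning.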

Example Solutions:

- Symantec DLP

- Microsoft Purview

- Forcepoint

- Code42 Incydr (Insider Risk Detection)

Limitations:

- Not designed specifically for CSAM

- Risk of alert fatigue or misclassification

- Must be tuned to avoid legal overreach

4. Dark Web and Threat Intel Monitoring

Use Case:

Security teams monitoring criminal forums, ransomware dumps, or breach data often use automated scraping tools to ingest files or archives. These are scanned using:

- VirusTotal intelligence filters

- YARA rules targeting file patterns

- Sandboxing tools (e.g. Cuckoo, Joe Sandbox)

Mitigation Layer:

- Block download of images/video unless explicitly whitelisted

- Use external sandboxes to quarantine suspicious archives

- Implement auto-delete rules for flagged content

Operational Best Practice: Always run threat intel ingestion in isolated, legally compliant environments with auto-redaction and reporting triggers.
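The first mitigation, blocking image and video downloads unless explicitly allowed, can be sketched as a pre-download check on the filename alone. This is a minimal example built on Python's standard `mimetypes` module; the `should_download` function and its fail-closed behavior for unknown types are assumptions made for illustration.

```python
import mimetypes

BLOCKED_MAJOR_TYPES = {"image", "video"}


def should_download(filename: str, allowlist: frozenset = frozenset()) -> bool:
    """Decide from the filename alone, before any bytes are fetched."""
    if filename in allowlist:
        return True
    guessed, _encoding = mimetypes.guess_type(filename)
    if guessed is None:
        return False  # unknown type: fail closed
    return guessed.split("/")[0] not in BLOCKED_MAJOR_TYPES


print(should_download("creds.txt"))   # True
print(should_download("photo.jpg"))   # False
print(should_download("clip.mp4"))    # False
```

A filename check is only a first layer: archives and mislabeled files pass it, so the quarantine and content-scanning steps listed above still have to run before anything reaches an analyst.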

5. Proactive Risk Controls and Policies

Detection is only half the game; what you do afterward matters more.

Must-Have Safeguards:

- Mandatory content scanning before human review

- Role-based access: Only legal or HR teams can access flagged files

- Secure logging of all detection events (with no content exposure)

- Immediate escalation playbooks that outline:

  - Stop-analysis triggers

  - Chain-of-custody handoff

  - Reporting mechanisms (e.g., NCMEC, IWF, Interpol)

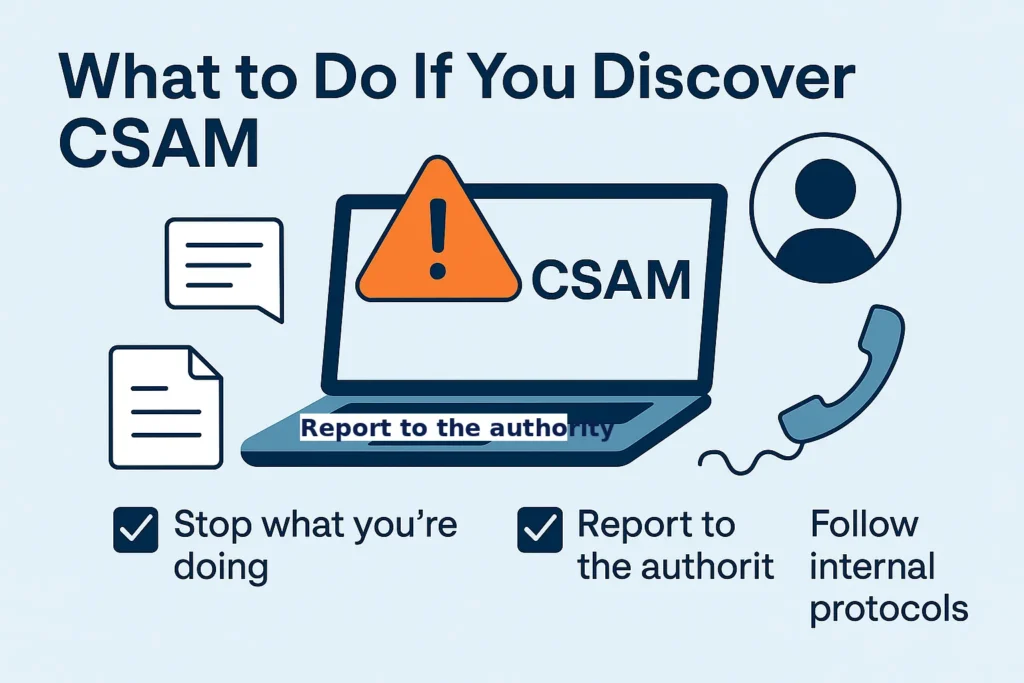

What to Do If You Discover CSAM

Finding CSAM (Child Sexual Abuse Material) on a system, whether in the course of digital forensics, malware analysis, or threat intelligence work, isn’t just a red flag. It’s a legal emergency.

Mishandling it for even a few minutes can destroy evidence, implicate the analyst, or result in criminal charges. Here’s the step-by-step response protocol every cybersecurity team must follow, no excuses.

1. Stop Everything Immediately

As soon as CSAM is suspected:

- Cease all analysis. Do not open, move, copy, rename, or scan the file.

- Isolate the system or drive. Disconnect from the network to prevent spread or tampering.

Why? In many jurisdictions, even temporary possession is a felony. Touch nothing until it’s escalated properly.

2. Report Internally to Your Chain of Command

Immediately notify your designated point of contact, usually the:

- CISO or Head of Security

- Legal counsel

- HR (if insider-related)

Do not discuss the contents of the file with unauthorized colleagues. Keep a written log of:

- Time of discovery

- File path/location

- Actions taken (e.g., stopped analysis, isolated system)

3. Preserve Evidence Without Accessing It

Maintaining chain of custody is critical. You must:

- Secure the system or storage device physically and digitally.

- Hash the file(s) using SHA-256 with a tool that reads the raw bytes but never renders the content (if legal in your jurisdiction).

- Record all actions with timestamps and access controls.

Important: Never upload suspicious content to public sandboxes (e.g., VirusTotal). This could inadvertently distribute illegal content.
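The hashing and logging steps above can be sketched as follows. This is a minimal example, assuming hashing is permitted in your jurisdiction and that the evidence is mounted read-only or behind a write blocker; the `custody_entry` function and its field names are hypothetical, not a recognized chain-of-custody format.

```python
import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream the file's raw bytes through SHA-256 without rendering or copying it."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def custody_entry(path: Path, actor: str, action: str) -> str:
    """Build one JSON log line holding metadata only: who, what, when, where, hash."""
    record = {
        "timestamp_utc": datetime.now(timezone.utc).isoformat(),
        "actor": actor,
        "action": action,
        "path": str(path),
        "sha256": sha256_of_file(path),
    }
    return json.dumps(record, sort_keys=True)
```

The log line carries no content, only a digest and context, so it can be shared with legal counsel and law enforcement without spreading the material itself.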

4. Notify Law Enforcement or Designated Authority

In many jurisdictions, reporting is legally mandatory, at least for service providers, and unjustified delay can itself create criminal or civil exposure.

| Region | Report To |
|---|---|
| U.S. | NCMEC CyberTipline, FBI |
| UK | Internet Watch Foundation (IWF), NCA |
| EU | National CSAM reporting portals |
| Global | Interpol (for multinational orgs) |

Use secure channels. Do not email the files or content directly; authorities will advise how to transfer evidence safely.

5. Escalate to Cybersecurity Incident Response Team (CSIRT)

Treat the discovery as a full-blown incident. Your CSIRT should:

- Validate logs and file hashes

- Prepare documentation for legal handoff

- Initiate an internal investigation (scope, origin, spread)

- Coordinate with law enforcement on next steps

If third-party tools or vendors were involved, notify them under NDA and legal supervision.

6. Protect the Analyst and the Org

The individual who discovered the content should be:

- Shielded from unnecessary exposure or blame

- Offered psychological support or trauma counseling

- Documented as a reporter, not a suspect, with full transparency

Real Talk: Analysts have faced criminal investigation or job loss due to poor internal protocols. Your org must protect its people as well as its systems.

7. Post-Incident Response & Policy Review

After the incident:

- Audit your detection and escalation protocols.

- Update your training materials to include CSAM response procedures.

- Conduct a retrospective to improve:

  - Access control on shared files

  - DLP rules

  - Threat intel ingestion filters

- Ensure that legal and HR are integrated into any future IR simulations involving CSAM risk.

If you encounter CSAM in your cybersecurity role, you’re not just handling files; you’re holding criminal evidence. The margin for error is zero. Follow this protocol:

- Stop immediately

- Report internally

- Preserve without touching

- Notify law enforcement

- Escalate to CSIRT

- Protect your staff

- Refine your process

In this space, speed, precision, and legality are everything. Handle it wrong, and your team could end up on the wrong side of the law.

Training & Prevention

The best way to deal with CSAM in cybersecurity? Avoid encountering it in the first place.

But since that’s not always possible, the next best move is training your people and hardening your systems to minimize exposure, protect staff, and ensure legal compliance when things go sideways.

Here’s how to build an organization that’s ready ethically, technically, and psychologically.

1. Train Analysts on CSAM Awareness and Protocols

Your SOC and DFIR teams must know:

- What CSAM is legally defined as

- Where it may appear in their work

- How to recognize early red flags

- Exactly what steps to take upon discovery

Training Methods:

- Quarterly tabletop exercises simulating CSAM discovery

- Mandatory onboarding modules on legal risk and reporting obligations

- Scenario-based learning using metadata, not content (hashes, file names, detection triggers)

Tip: Focus on behavioral pattern recognition (e.g., suspicious file clusters, P2P file types, encrypted zips with certain names) to avoid exposing teams to traumatic content during training.

2. Implement and Enforce a Written CSAM Response Policy

No one should be improvising. Publish a formal, legally-reviewed CSAM policy that includes:

- Legal references by jurisdiction

- Step-by-step incident response checklist

- Named contacts (internal and law enforcement)

- Chain-of-custody and logging procedures

Policy Must Include:

- Immediate stop-work directive upon discovery

- Reporting flowchart

- List of prohibited actions (opening, copying, uploading, etc.)

Make this policy part of:

- Employee handbooks

- Security team SOPs

- Vendor agreements and red-teaming contracts

3. Limit Exposure Through Role-Based Access Controls

Not every analyst needs access to flagged or suspicious content. Set up tiered access levels so only trained and legally cleared personnel can interact with high-risk data.

Recommendations:

- Use RBAC within forensic tools, SIEM, and cloud storage

- Flag sensitive detection results for escalation only

- Require multi-party access approval for viewing quarantined files

Best Practice: Create “quarantine sandboxes” where CSAM-suspected files are auto-flagged, hash-matched, and locked pending legal review. No analyst should be exposed by default.
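Multi-party approval for quarantined files can be modeled in a few lines. The sketch below is illustrative only: the cleared roles, the two-approval threshold, and the `User`, `QuarantinedItem`, `approve`, and `may_view` names are assumptions, and a real deployment would enforce this inside the forensic or storage platform rather than in application code.

```python
from dataclasses import dataclass, field

CLEARED_ROLES = {"legal", "hr"}  # hypothetical roles cleared for quarantine access
REQUIRED_APPROVALS = 2           # hypothetical threshold


@dataclass(frozen=True)
class User:
    name: str
    role: str


@dataclass
class QuarantinedItem:
    sha256: str  # the item is referenced by hash only, never by content
    approvals: set = field(default_factory=set)


def approve(item: QuarantinedItem, approver: User) -> None:
    """Record an approval; only cleared roles may approve."""
    if approver.role not in CLEARED_ROLES:
        raise PermissionError(f"{approver.name} is not cleared to approve access")
    item.approvals.add(approver.name)


def may_view(item: QuarantinedItem, viewer: User) -> bool:
    """Allow viewing only for a cleared role with enough approvals from other people."""
    independent = item.approvals - {viewer.name}
    return viewer.role in CLEARED_ROLES and len(independent) >= REQUIRED_APPROVALS
```

Excluding the viewer's own approval from the count means no single person can unlock a quarantined file alone, and analysts outside the cleared roles are denied by default.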

4. Use CSAM-Aware Tools in the Workflow

Integrate detection systems that can filter or flag CSAM before it reaches human analysts:

- PhotoDNA or Project VIC for hash-based pre-filtering

- DLP tools tuned to block exfiltration and detect illicit media types

- Threat intel ingestion filters with auto-redaction of image-heavy dumps

- Email/Drive scanners that route matches to legal/HR, not security teams

Caution: Avoid “DIY” scanning scripts that log or copy flagged files. Use vetted platforms that are legally compliant and log only hashes or metadata.

5. Provide Psychological Support and Ethical Debriefing

Encountering CSAM even momentarily can cause emotional trauma and long-term psychological damage. Your prevention model must include:

- Access to trauma-informed counselors

- Anonymous debriefing options

- Scheduled wellness check-ins after any CSAM event

- Shielding affected staff from further exposure

Reality Check: Some analysts never return to active investigation work after a CSAM exposure event. Your culture must prioritize mental health and ethical support as much as legal risk.

6. Audit and Simulate Regularly

CSAM isn’t static. New threats emerge as:

- Criminals use steganography or encryption to hide files

- Ransomware gangs dump data that includes CSAM

- Insider threats store illicit content on company systems

Your defense:

- Quarterly simulated CSAM discovery incidents

- External audits of your detection stack and reporting workflows

- Spot checks on access logs, file scans, and threat intel inputs

Case Studies & Incident Reports

To understand the gravity of CSAM in cybersecurity, look at what’s already happened in the field. These aren’t hypotheticals; they’re real-world incidents where organizations encountered CSAM in the wild. Each case reveals not just what went wrong (or right), but how quickly exposure can spiral into a legal and ethical crisis.

Case 1: Ransomware Victim’s Server Contained CSAM

Company: U.S. tech startup

Incident Type: Post-breach forensics

Timeline:

- T0: SOC team responds to ransomware breach on cloud-hosted server

- T+3 hrs: Forensic analyst recovers encrypted files for investigation

- T+6 hrs: Decrypted archive reveals multiple images flagged by PhotoDNA

- T+7 hrs: Team halts all access, notifies legal, contacts NCMEC

- T+24 hrs: Law enforcement takes over device and evidence custody

Outcome:

While the breach itself was unrelated to CSAM, the discovery triggered a parallel criminal investigation. The company was cleared, but the analysts involved were interviewed by federal agents and required trauma counseling.

Lesson:

Always treat breach environments as potential crime scenes, not just digital ones.

Case 2: CSAM Found in Shared Enterprise Cloud Folder

Company: European marketing agency

Incident Type: Internal audit via Microsoft 365 DLP

Timeline:

- T0: Scheduled audit flags a suspicious .zip file shared internally via OneDrive

- T+1 hr: Security team isolates file, hashes match known CSAM via Project VIC

- T+2 hrs: File traced to personal upload from a remote employee’s account

- T+5 hrs: HR, legal, and authorities are contacted; employee suspended

- T+3 days: Law enforcement confirms presence of CSAM on employee devices

Outcome:

The employee was arrested. The company avoided liability by having documented DLP policies and a clear escalation path.

Lesson:

Strong cloud monitoring and automated flagging systems can uncover deeply hidden threats even from insiders.

Case 3: Dark Web Threat Intel Feed Contaminated with CSAM

Company: Cybersecurity vendor scraping breach forums

Incident Type: Threat intel ingestion

Timeline:

- T0: Dark web monitoring bot downloads new ransomware dump from a .onion site

- T+2 hrs: Archive auto-ingested into internal database for parsing

- T+3 hrs: AI-based scan flags multiple files as likely CSAM

- T+4 hrs: Files quarantined, internal IR team notified

- T+6 hrs: External legal counsel and IWF contacted

Outcome:

No staff were exposed directly. A rapid quarantine protocol prevented legal breach. The .onion site was reported and later seized.

Lesson:

Threat intel feeds are high-risk vectors for CSAM contamination. All automation must include filters, redaction, and strict sandboxing.

Case 4: Internal Employee Discovered with CSAM on Work Laptop

Company: Fortune 500 enterprise

Incident Type: Insider threat investigation

Timeline:

- T0: Endpoint DLP flags unusual file transfers to USB device

- T+1 hr: Device confiscated, image captured by DFIR team

- T+3 hrs: Several files hash-match known CSAM, system isolated

- T+5 hrs: HR, legal, and federal authorities looped in

- T+1 day: Employee arrested on-site by law enforcement

Outcome:

The company issued a public statement, increased internal monitoring, and conducted an audit across all departments. Lawsuit threats were mitigated due to proactive internal controls and transparency.

Lesson:

You’re responsible not just for external threats, but for what your people do with your hardware. Regular endpoint audits are not optional.

Summary: Patterns Across Incidents

| Pattern | Implication |
|---|---|
| Early detection by DLP or hash match | Prevents human exposure and legal liability |
| Clear escalation procedures | Reduce chaos and protect staff |
| Rapid involvement of legal & law enforcement | Essential to maintaining compliance |
| Psychological fallout for staff | Must be addressed proactively |

Every one of these incidents followed a tight timeline: from discovery to escalation within 1–6 hours. Delay = liability. The faster your response, the safer your team.

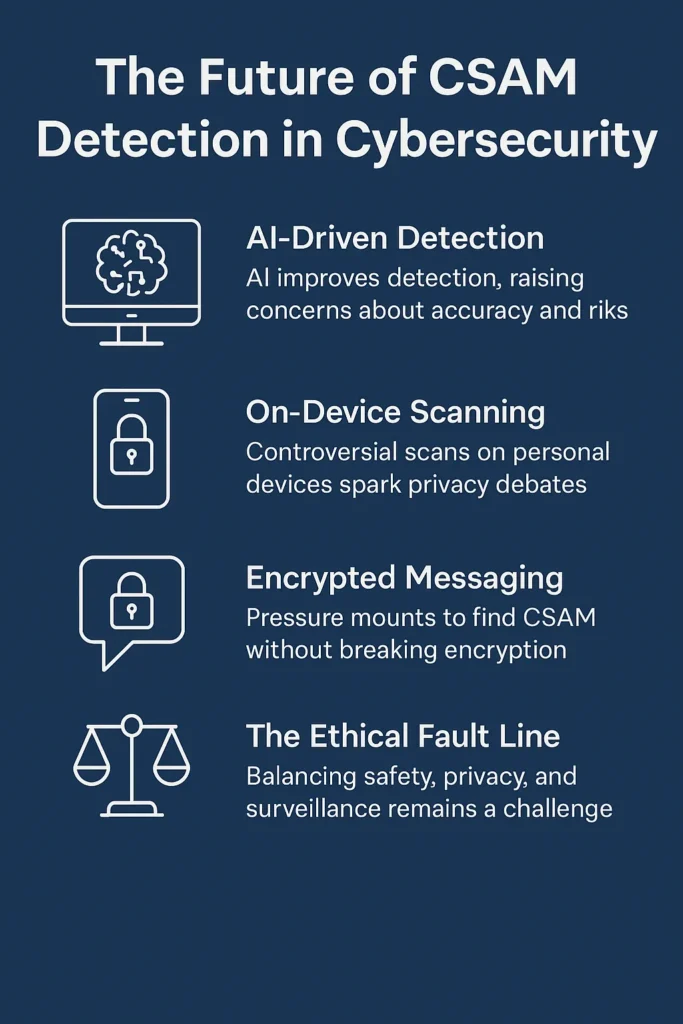

The Future of CSAM Detection in Cybersecurity

As digital ecosystems expand, so does the threat and complexity of CSAM. But while governments and platforms push for more aggressive scanning tech, cybersecurity pros are caught in the middle: part guardian, part witness, and increasingly, part target.

The next decade will reshape how CSAM is detected, who gets exposed, and what’s sacrificed in the name of safety.

1. AI-Driven Detection: More Power, More Risk

The biggest evolution in CSAM detection is artificial intelligence. AI models trained on massive image datasets can now identify patterns far beyond hash matches, detecting brand-new CSAM, altered media, and even suspicious pose or context.

Emerging Tools:

- Google CSAI Match+: Goes beyond matching by analyzing visual context

- Meta’s AI moderation engine: Used to flag borderline content in real time

- Thorn’s Spotlight: Used by law enforcement to reduce time spent manually reviewing material

Why It Matters:

- Pros: AI can catch what humans and hash sets miss

- Cons: False positives, black-box logic, and potential bias introduce serious risks

Reality: A mistagged image could implicate an innocent analyst—or destroy someone’s digital reputation. Trusting AI without human oversight is asking for lawsuits.

2. On-Device Scanning: Apple’s Failed Experiment

In 2021, Apple proposed scanning user photos before they were uploaded to iCloud. Their plan: match images to CSAM hash databases directly on the device.

Backlash:

- Privacy advocates called it pre-crime surveillance

- Security experts warned it would create a global backdoor for authoritarian regimes

- Apple delayed the rollout after massive public outcry and confirmed in December 2022 that it had dropped the plan

Lesson Learned:

Even good intentions can pave the road to digital authoritarianism. Once on-device scanning is normalized, it’s just one config tweak away from scanning for political dissent, not CSAM.

3. Encrypted Messaging: The Next Battleground

End-to-end encrypted platforms (like WhatsApp, Signal, and Proton) are under mounting pressure to implement CSAM detection even if it means compromising encryption.

The Stakes:

- Law enforcement argues: CSAM thrives in the shadows of encryption

- Privacy groups argue: Weakening encryption endangers activists, whistleblowers, and journalists

Likely Outcome:

Tech companies may be forced to choose: comply with CSAM detection mandates or resist and risk being banned in certain jurisdictions. Expect fragmented policies by country and an underground boom in “zero-knowledge” comms.

4. The Rise of Federated Hash Databases

Currently, hash databases like NCMEC’s or Project VIC are restricted to law enforcement and vetted partners. But as more orgs encounter CSAM, there’s pressure to make these databases more accessible.

What’s Coming:

- Federated access for vetted SOC teams and IR firms

- Tiered trust models (read-only hash queries vs full DB access)

- Cross-platform syncing to catch CSAM across services (Google, Microsoft, AWS)

Danger: These systems must be secure, auditable, and never used to expand surveillance scope beyond CSAM.
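One way to picture the tiered trust model is a service that answers yes/no hash queries for every vetted caller and reserves bulk export for a higher tier, with every call audited. This is a speculative sketch of an idea the section describes as still emerging; the `Tier` values, the `FederatedHashDirectory` class, and its methods are hypothetical and do not describe any existing NCMEC or Project VIC interface.

```python
from enum import Enum


class Tier(Enum):
    HASH_QUERY = "read-only hash queries"
    FULL_ACCESS = "full database access"


class FederatedHashDirectory:
    def __init__(self, hashes):
        self._hashes = frozenset(hashes)
        self.audit_log = []  # entries are (caller, operation, allowed)

    def contains(self, caller: str, sha256_hex: str) -> bool:
        """Open to every vetted tier: answers yes or no and reveals nothing else."""
        self.audit_log.append((caller, "contains", True))
        return sha256_hex in self._hashes

    def export_all(self, caller: str, tier: Tier) -> frozenset:
        """Bulk export, restricted to the full-access tier; denials are logged too."""
        allowed = tier is Tier.FULL_ACCESS
        self.audit_log.append((caller, "export_all", allowed))
        if not allowed:
            raise PermissionError("bulk export requires the FULL_ACCESS tier")
        return self._hashes
```

Logging denied requests alongside successful ones is what makes the system auditable in the sense the warning above calls for.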

5. The Ethical Fault Line: Detection vs Dystopia

What starts as CSAM detection can easily slide into digital overreach:

- Mass surveillance disguised as child protection

- Corporate liability turned into mass file scanning

- False flags leading to criminal accusations

The Cybersecurity Dilemma:

- Stay compliant without becoming complicit

- Protect users without destroying privacy

- Detect threats without deputizing analysts into law enforcement

Final Thoughts

What is CSAM in cybersecurity? It’s not just a definition, it’s a digital tripwire that can explode without warning.

CSAM (Child Sexual Abuse Material) may appear during malware analysis, threat intelligence gathering, or forensic recovery, and when it does, your next move matters more than anything you’ve done before.

You’ve seen how:

- CSAM shows up in real-world cybersecurity environments

- Detection tools walk a fine line between precision and false positives

- Mishandling CSAM, even accidentally, can lead to criminal charges

- AI, surveillance, and legal pressure are rewriting the rules of detection

If you work in cybersecurity and don’t have a CSAM protocol, you’re not just unprepared; you’re exposed.

Action Steps:

- Train your team to recognize, report, and escalate CSAM legally

- Implement tools that detect without exposing staff

- Lock down access with role-based controls

- Push back against overreach disguised as child safety

Because the real answer to what CSAM is in cybersecurity isn’t just about law or ethics, it’s about survival. Yours, your team’s, and your organization’s.

Frequently Asked Questions

Is scanning encrypted messages or files for CSAM legal?

Laws vary by region. Some governments advocate for client-side scanning (e.g., Apple’s abandoned plan), but privacy groups argue this undermines encryption and user rights. Organizations must tread carefully and stay compliant with local regulations.

Are cybersecurity teams legally required to report CSAM?

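In many regions, yes, although the scope differs. The U.S. and Canada impose statutory reporting duties on online service providers, and rules in the EU and UK vary by country and sector. Failing to report where a duty applies can result in criminal liability for individuals and organizations.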

What is the Cybersecurity Audit Model (CSAM)?

The Cybersecurity Audit Model (CSAM) is a structured framework used to conduct cybersecurity audits across organizations or government entities. It helps assess and quantify an entity’s cybersecurity maturity, assurance level, and overall readiness in response to digital threats.

What is CSAM detection?

CSAM detection refers to technologies that identify known or suspected Child Sexual Abuse Material, most commonly by matching content against a database of hashes of known illegal material. The term is also closely associated with the system Apple proposed in 2021, which would have matched photos on the device before upload to iCloud Photos; Apple later abandoned that plan.

Can AI reliably detect CSAM in cybersecurity environments?

AI can assist in detection, but should never be relied on as the sole decision-maker. False positives and black-box models can lead to legal and ethical problems. Always confirm with legal procedures and human oversight.